Stored Procedure error not failing parent Workflow

There's an issue I'm investigating: I have a workflow with a stored procedure executed as a post-load. It fails without failing the parent workflow. I've decided to come up with a simple test that would narrow down the cause.

Stored procedure

Here's the stored procedure I'll use for testing:CREATE PROCEDURE RaiseErrorTest AS

BEGIN

--PRINT 'started'

--SELECT 1

IF 1=1

BEGIN

RAISERROR ('The servers are busy at this time. Please try again later', 16, 1)

RETURN 1

END

PRINT 'Completed'

SELECT 2

END

All it does is perform the RAISERROR that should fail the whole process. There are also two disabled statements - we'll get to those later.

Workflow

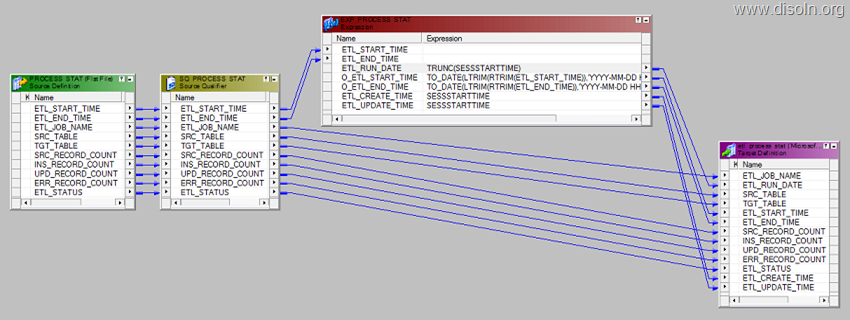

I've created a simple workflow that executes SELECT 1 on source and uses a FALSE filter, so nothing gets written to target. It also includes a Post-load stored procedure call to the above SP:Test 1: Native connector, disabled statements before raising error

Ok, lets start it and see what happens:Great, session has failed as expected raising the defied error. The workflow has failed.

Test 2: Native connector, enabled statements before raising error

Now, let's enable the two statements back:ALTER PROCEDURE RaiseErrorTest AS

BEGIN

PRINT 'started'

SELECT 1

IF 1=1

BEGIN

RAISERROR ('The servers are busy at this time. Please try again later', 16, 1)

RETURN 1

END

PRINT 'Completed'

SELECT 2

END

Test 3: ODBC, disabled statements before raising error

Let's revert the stored procedure to have disabled lines 3 & 4 and try the ODBC connection:

Test 4: ODBC, enabled statements before raising error

Now for the final test: using ODBC connection for the stored procedure that runs some statements before raising error:

Session (and worflow) executed successfully! Great, right? Well, not quite... The stored procedure fails but this is not escalated to PowerCenter.

Conclusion

While this is handled correctly by Native SQL Server connector, any first result set returned by the stored procedure fools the ODBC into treating this a successful execution. Whatever happens later on is discarded and you may never know your stored procedure failed.

Unfortunatelly I have no idea how to overcome this issue.